The Biodiversity Data Journal (BDJ) became the second open-access peer-reviewed scholarly title to make use of the hosted portals service provided by the Global Biodiversity Information Facility (GBIF): an international network and data infrastructure aimed at providing anyone, anywhere, open access to data about all types of life on Earth.

Hosted on the GBIF platform, the Biodiversity Data Journal portal aims to support biodiversity data use and engagement at national, institutional, regional and thematic scales by making the data easier to access and reuse for users with varying levels of expertise in data use and management.

Having piloted the GBIF hosted portal solution with BDJ, arguably the most revolutionary biodiversity journal in its exclusively open-access scholarly portfolio, Pensoft will soon replicate the effort with at least 20 other journals in the field. This would more than double the number of GBIF-hosted portals currently in existence.

At the time of writing, the BDJ portal provides seamless access to, and exploration of, nearly 300,000 occurrence records of organisms from all over the world, extracted from all papers published in the journal to date. In addition, the portal provides direct access to more than 800 datasets published alongside BDJ papers, as well as to almost 1,000 citations of the journal articles associated with those datasets.


Using the portal's search categories, users can narrow their queries by geography, location, taxon, IUCN Global Red List Category, geological context and many other criteria. The dashboard also lets users view a range of statistics about the data and even explore potentially related records through the clustering feature (e.g. a specimen sequenced by another institution, or type material deposited at different institutions). Additionally, the BDJ portal provides basic information about the journal itself and links to the news section of its website.

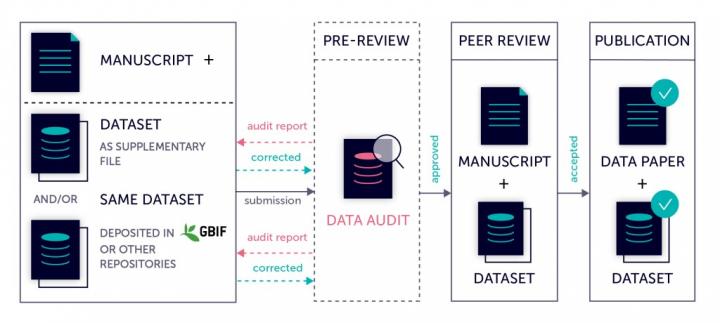

Launched in 2013 with the aim of bringing together openly available data and narrative in a peer-reviewed scholarly paper, the Biodiversity Data Journal has remained at the forefront of scholarly publishing in biodiversity research. Over the years, it has been among the first to adopt many novelties developed by Pensoft, including the entirely XML-based ARPHA Writing Tool (AWT), which has underpinned the journal's submission and review process for several years now. Besides the convenience of a fully online authoring environment, AWT offers multiple integrations with key databases, such as GBIF and BOLD, allowing direct export and import at the authoring stage and thereby further facilitating the publication and dissemination of biodiversity data. More recently, BDJ also piloted the "Nanopublications for Biodiversity" workflow and format, a novel solution to future-proof biodiversity knowledge by sharing "pixels" of machine-actionable scientific statements.

“I am thrilled to see the Biodiversity Data Journal’s (BDJ) hosted portal active, ten years since it became the first journal to submit taxon treatments and Darwin Core occurrence records automatically to GBIF! Since its launch in 2013, BDJ has been unrivalled amongst taxonomy and biodiversity journals in its unique workflows that provide authors with import and export functions for structured biodiversity data to/from GBIF, BOLD, iDigBio and more. I am also glad to announce that more than 30 Pensoft biodiversity journals will soon be present as separate hosted portals on GBIF thanks to our long-time collaboration with Plazi, ensuring proper publication, dissemination and re-use of FAIR biodiversity data,” said Prof. Dr. Lyubomir Penev, founder and CEO of Pensoft, and founding editor of BDJ.

“The release of the BDJ portal and subsequent ones planned for other Pensoft journals should inspire other publishers to follow suit in advancing a more interconnected, open and accessible ecosystem for biodiversity research,” said Vince Smith, editor-in-chief of BDJ and head of digital, data and informatics at the Natural History Museum, London.